Marketing Data Science

- 曹修源

- Application, Data analytics

- July 29, 2024


Course materials for this class

20240221

(1) Service Quality Scale (SERVQUAL): Parasuraman, A., Berry, Leonard L., and Zeithaml, Valarie A., "SERVQUAL: A Multiple-Item Scale for Measuring Consumer Perceptions of Service Quality," Journal of Retailing, 1988, 64(1), 12-40.

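The two most common scales for measuring service quality are SERVQUAL and SERVPERF, and SERVQUAL is the more widely used of the two. Proposed by Parasuraman, Berry, and Zeithaml in 1988, the SERVQUAL scale measures service quality and has served as the basis for much subsequent research.

Starting from the ten attributes of their conceptual model of service quality (the PZB model), the authors sampled, validated, and redefined them into five dimensions:

- Reliability: the ability to perform the promised service dependably and accurately.

- Responsiveness: the willingness to help customers and provide prompt service.

- Empathy: caring, individualized attention given to customers.

- Assurance: the knowledge and courtesy of employees and their ability to inspire trust and confidence.

- Tangibility: physical facilities, equipment (such as a hotel's), and service personnel.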
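SERVQUAL scores service quality as a gap: for each item, the perception rating minus the expectation rating, averaged within a dimension, so a negative gap means the service fell short of what customers expected. The sketch below is a minimal illustration only; the column names (`E_rel1`, `P_rel1`, ...) and the ratings are hypothetical and do not reflect the layout of the course questionnaire.

```python
import pandas as pd

# Hypothetical 7-point ratings from three respondents on two items of one dimension.
# "E_" columns hold expectations, "P_" columns hold perceptions (illustrative names only).
ratings = pd.DataFrame({
    "E_rel1": [7, 6, 7], "P_rel1": [5, 6, 4],
    "E_rel2": [6, 6, 7], "P_rel2": [6, 5, 5],
})


def servqual_gap(df, items):
    """Mean gap score (perception minus expectation) over the given items."""
    gaps = [df[f"P_{item}"] - df[f"E_{item}"] for item in items]
    # Average the item gaps per respondent, then across respondents
    return float(pd.concat(gaps, axis=1).mean(axis=1).mean())


reliability_gap = servqual_gap(ratings, ["rel1", "rel2"])
print(round(reliability_gap, 3))
```

The same function can be called once per dimension with that dimension's item names.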

(2) Designing an online service-quality questionnaire. Examples: https://forms.gle/FtoEuFZGsyxzwqt37 https://forms.gle/ZdHJeuh2xYm2J7YG6

Example library service-quality questionnaire: LibQual

Example lodging-industry service-quality questionnaire: LQI

(3) Importance-Performance Analysis (IPA): Martilla, J.A. and James, J.C. (1977), "Importance-Performance Analysis," Journal of Marketing, 41, 77-79. How do we run the analysis? (example file: NCHUMTSQ_OK.xlsx)

Python code

import pandas as pd
import numpy as np
import matplotlib.pyplot as plt

# Read the first worksheet of the Excel file
data = pd.read_excel("NCHUMTSQ.xlsx", sheet_name=0)

# Split the columns: positions 0, 2, 4, ... (1st, 3rd, 5th ... columns) versus positions 1, 3, 5, ...
col_odd = np.arange(len(data.columns)) % 2 == 0
data_col_odd = data.iloc[:, col_odd]
# Drop the first column (not a rating item); the remaining odd columns are satisfaction items
data_col_satisfaction = data_col_odd.iloc[:, 1:]
# The even columns are importance items
data_col_importance = data.iloc[:, ~col_odd]

# Positions of the 22 items belonging to each dimension
dimension_slices = {
    'Tangibility': slice(0, 4),
    'Assurance': slice(4, 9),
    'Empathy': slice(9, 14),
    'Responsiveness': slice(14, 18),
    'Reliability': slice(18, 22),
}

def dimension_means(item_means):
    """Average the item means within each dimension."""
    return {dim: item_means.iloc[s].mean() for dim, s in dimension_slices.items()}

# Mean of each item column, then the mean of each dimension
satisfaction_df_dim = dimension_means(data_col_satisfaction.mean(axis=0))
importance_df_dim = dimension_means(data_col_importance.mean(axis=0))

print(satisfaction_df_dim)
print(importance_df_dim)

# Build the IPA data frame: one row per dimension
df = pd.DataFrame({
    'Importance': importance_df_dim,
    'Performance': satisfaction_df_dim,
})
df['Attribute'] = df.index

# Scatter plot: performance on the x-axis, importance on the y-axis
fig, ax = plt.subplots()
df.plot(kind='scatter', x='Performance', y='Importance', ax=ax)

# Label each point with its dimension name
for row in df.itertuples():
    ax.annotate(row.Attribute, (row.Performance, row.Importance))

ax.set_title('Importance-Performance Analysis')
ax.set_xlabel('Performance')
ax.set_ylabel('Importance')

# Dashed lines at the mean performance and mean importance split the plot into four quadrants
ax.axvline(x=df['Performance'].mean(), color='r', linestyle='--')
ax.axhline(y=df['Importance'].mean(), color='r', linestyle='--')

plt.show()
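The dashed mean lines split the chart into the four IPA quadrants that Martilla and James labeled "Concentrate Here", "Keep Up the Good Work", "Low Priority", and "Possible Overkill". A minimal sketch for labeling each dimension by quadrant, assuming (as the plot does) that the means are the cut-offs; the function name `ipa_quadrants` and the example numbers are hypothetical.

```python
import pandas as pd


def ipa_quadrants(df, x="Performance", y="Importance"):
    """Label each attribute with its IPA quadrant, using the column means as cut-offs."""
    x_mean, y_mean = df[x].mean(), df[y].mean()

    def label(row):
        high_importance = row[y] >= y_mean
        high_performance = row[x] >= x_mean
        if high_importance and not high_performance:
            return "Concentrate here"
        if high_importance and high_performance:
            return "Keep up the good work"
        if not high_importance and not high_performance:
            return "Low priority"
        return "Possible overkill"

    return df.assign(Quadrant=df.apply(label, axis=1))


# Hypothetical dimension means, for illustration only
example = pd.DataFrame(
    {"Importance": [4.6, 4.5, 3.9, 4.0], "Performance": [3.8, 4.4, 3.7, 4.3]},
    index=["Reliability", "Assurance", "Tangibility", "Empathy"],
)
result = ipa_quadrants(example)
print(result)
```

Applied to the `df` built above, `ipa_quadrants(df)` adds the same `Quadrant` column; other cut-offs, such as the scale midpoint, are also used in practice.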

R code

#-----------------------------------------------
# Install the required packages first, then load them with library()
#-----------------------------------------------
library(xlsx)      # read.xlsx()
library(dplyr)     # %>% and mutate()
library(ggplot2)   # plotting
library(ggrepel)   # geom_label_repel()

#------------ set working directory ------------
#setwd("c://Koong//csv//SERVQUAL_IPA")
#-----------------------------------------------

# Read the first worksheet of the Excel file
data <- read.xlsx("NCHUMTSQ.xlsx", sheetIndex = 1)
#head(data)
#head(data[1:3, 1:2])
#colnames(data)[2]

#---------------------------------------------------
col_odd <- seq_len(ncol(data)) %% 2            # 1 for odd-numbered columns, 0 for even
data_col_odd <- data[, col_odd == 1]           # odd columns
data_col_satisfaction <- data_col_odd[, -1]    # drop column 1 (not a rating item): satisfaction items
data_col_importance <- data[, col_odd == 0]    # even columns: importance items

# Average the item means within each dimension (items 1-4, 5-9, 10-14, 15-18, 19-22)
dim_means <- function(item_means) {
  c(Tangibility    = mean(item_means[1:4]),
    Assurance      = mean(item_means[5:9]),
    Empathy        = mean(item_means[10:14]),
    Responsiveness = mean(item_means[15:18]),
    Reliability    = mean(item_means[19:22]))
}
satisfaction_df_dim <- dim_means(colMeans(data.frame(data_col_satisfaction)))
importance_df_dim   <- dim_means(colMeans(data.frame(data_col_importance)))

#------- Commonly used statistics functions -------------------
#mean(duration)
#median(duration)
#quantile(duration)
#boxplot(duration, horizontal=TRUE)
#var(duration)
#sd(duration)
#https://methodenlehre.github.io/SGSCLM-R-course/index.html
#----------------------------------------------------------

satisfaction_df_dim
importance_df_dim

#---------------------------------------------------
ipa_df <- data.frame(dimension = names(importance_df_dim),
                     importance_df_dim, satisfaction_df_dim)
ipa_df

# Center both axes on their means so that the quadrant lines fall at 0
ipa <- ipa_df %>%
  mutate(cmove = importance_df_dim - mean(importance_df_dim)) %>%
  mutate(smove = satisfaction_df_dim - mean(satisfaction_df_dim))

#-------- IPA1 ggplot ---------------------------------------------------------
empty_theme <- theme(
  plot.background = element_blank(),
  panel.grid.major = element_blank(),
  panel.grid.minor = element_blank(),
  panel.border = element_blank(),
  panel.background = element_blank(),
  axis.line = element_blank(),
  axis.ticks = element_blank(),
  axis.text.y = element_text(angle = 90))

#------------------------------------------------
# ggplot(<data>) +              # set up the canvas
#   geom_<geometry type>(       # draw the geometric objects
#     aes(<coordinate mapping>) # map variables to coordinates
#   )
# https://ggplot2-book.org/index.html
#------------------------------------------------

ggplot(ipa, aes(x = smove, y = cmove, label = dimension)) +
  empty_theme +
  theme(panel.border = element_rect(colour = "lightgrey", fill = NA, size = 1)) +
  labs(title = "IPA analysis",
       x = "Performance (satisfaction), centered on its mean",
       y = "Importance, centered on its mean") +
  geom_vline(xintercept = 0, colour = "lightgrey", size = 0.5) +
  geom_hline(yintercept = 0, colour = "lightgrey", size = 0.5) +
  geom_point(size = 0.5) +
  geom_label_repel(size = 4, fill = "deepskyblue", colour = "black",
                   min.segment.length = unit(0, "lines"))

#plot
#dev.off()

20240229

IPA in R (text data) - FTTA (From Text to Action, From Mining to Meaning)

1. Download Notepad++: https://notepad-plus-plus.org/downloads/

2. Chinese sentiment dictionary TLSSD (Tsao, Lin, and Su SD): TLSSD.csv

3. Chinese stop words: stopwordsutf8.csv

4. Jeiba_Tsao_SERVQ_IPA_0200_0312.R https://www.sidrlab.net/

5. Text corpus: 3J.xlsx

6. Dimension feature-keyword set: 3JDim.xlsx

7. Reference: Tsao, Hsiu-Yuan, Colin Campbell, Sean Sands, and Alexis Mavrommatis (2022), "From mining to meaning: How B2B marketers can leverage text to inform strategy," Industrial Marketing Management, 106, pp. 90-98. (SSCI; Impact Factor = 8.89; ranked Q1 in Management)

Download FTTA_R.ZIP to get items 2 through 6.
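The Python script below follows one pipeline: split the text into sentences, score each sentence with the sentiment dictionary, assign it to a dimension through the keyword set, then treat the number of sentences per dimension as importance and their mean sentiment as performance. A toy sketch of that pipeline, assuming a tiny hypothetical lexicon and keyword set in place of TLSSD.csv and the dimension file, and pre-tokenized English words in place of jieba output. It mirrors the Python scoring (mean of matched word scores); the R script sums the word scores per sentence instead.

```python
import pandas as pd

# Hypothetical miniature inputs, for illustration only
lexicon = {"friendly": 2, "fast": 1, "dirty": -2, "slow": -1}
dimension_keywords = {
    "Responsiveness": {"fast", "slow", "wait"},
    "Tangibility": {"room", "lobby"},
}

# Sentences already split into words (jieba does this step for Chinese text)
sentences = [
    ["staff", "friendly", "fast"],
    ["room", "dirty"],
    ["check-in", "slow", "wait"],
    ["lobby", "friendly"],
    ["price", "fair"],
]


def sentence_score(words):
    """Mean lexicon score of the words found in the lexicon; 0 if none are found."""
    hits = [lexicon[w] for w in words if w in lexicon]
    return sum(hits) / len(hits) if hits else 0


def sentence_dimension(words):
    """First dimension whose keyword set contains one of the words, else 'unknown'."""
    for w in words:
        for dim, keys in dimension_keywords.items():
            if w in keys:
                return dim
    return "unknown"


df = pd.DataFrame({
    "dimension": [sentence_dimension(s) for s in sentences],
    "score": [sentence_score(s) for s in sentences],
})

# Importance = sentence count per dimension; performance = mean sentiment per dimension
ipa = df.groupby("dimension")["score"].agg(Importance="count", Sentiment="mean")
print(ipa)
```

As in the script, rows labeled `unknown` are usually dropped before plotting the matrix.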

Python code

import re
import csv

import jieba
import pandas as pd
import matplotlib.pyplot as plt
from wordcloud import WordCloud

# Load the sentiment dictionary: each CSV row is "word,score"
def load_sentiment_dictionary(filename):
    sentiment_dict = {}
    with open(filename, 'r', newline='', encoding='utf-8') as csvfile:
        for row in csv.reader(csvfile):
            word, score = row
            sentiment_dict[word] = int(score)
    return sentiment_dict

sentiment_dict = load_sentiment_dictionary('TLSSD.csv')

# Split Chinese text into sentences
def split_chinese_sentences(text):
    # Sentence-ending punctuation, both half-width and full-width
    chinese_punctuation_pattern = r'[!?。;！？；]'
    sentences = re.split(chinese_punctuation_pattern, text)
    # Remove empty strings
    return [sentence.strip() for sentence in sentences if sentence.strip()]

# Sentence sentiment: the mean score of the dictionary words it contains (0 if none)
def calculate_emotion_score(sentence, sentiment_dict):
    words = jieba.lcut(sentence)
    scores = [sentiment_dict[word] for word in words if word in sentiment_dict]
    return sum(scores) / len(scores) if scores else 0

# Process a text file line by line
def process_file(file_path):
    # One record per sentence: line number, sentence number within the line, sentence, sentiment score
    records = []
    with open(file_path, 'r', encoding='utf-8') as file:
        for line_number, line in enumerate(file, start=1):
            for sentence_number, sentence in enumerate(split_chinese_sentences(line), start=1):
                records.append({
                    'Line Number': line_number,
                    'Sentence Number': sentence_number,
                    'Sentence': sentence,
                    'Sentiment Score': calculate_emotion_score(sentence, sentiment_dict),
                })
    return pd.DataFrame(records)

#file_path = 'shopify.txt'
file_path = '3J.txt'  # replace with the path to your own text file
text_dataframe = process_file(file_path)
print(text_dataframe)

#---------------------------------------------------------------------------
# Assign a dimension (aspect): the first aspect whose keywords contain a word of the sentence
def classify_aspect(sentence, aspect_keywords):
    for word in jieba.lcut(sentence):
        for aspect, keywords in aspect_keywords.items():
            if word in keywords:
                return aspect
    return 'unknown'

# Read the dimension keywords: each line is "<aspect> <keyword1> <keyword2> ...", whitespace-separated
def load_aspect_keywords(file_path):
    aspect_keywords = {}
    with open(file_path, 'r', encoding='utf-8') as file:
        for line in file:
            if not line.strip():
                continue  # skip blank lines
            aspect, *keywords = line.split()
            aspect_keywords[aspect] = set(keywords)
    return aspect_keywords

keywords_file_path = '3JDims.txt'
aspect_keywords = load_aspect_keywords(keywords_file_path)

# Classify every sentence and record its dimension
aspects = []
for sentence in text_dataframe['Sentence']:
    aspect = classify_aspect(sentence, aspect_keywords)
    aspects.append(aspect)
    print(f"Sentence: '{sentence}', dimension: {aspect}")
text_dataframe['aspect'] = aspects
df = text_dataframe

#------------------------------------------
positive_df = df[df['Sentiment Score'] > 0]
negative_df = df[df['Sentiment Score'] < 0]

#------------------------------------------
# Segment the positive and negative sentences with jieba, joined by spaces
positive_process_text = ' '.join(jieba.cut(' '.join(positive_df['Sentence']), cut_all=False))
negative_process_text = ' '.join(jieba.cut(' '.join(negative_df['Sentence']), cut_all=False))

#-------------------------------------------------------
# WordCloud
#-------------------------------------------------------
# Load the stop-word list once
with open('stopwordsutf8.txt', 'r', encoding='utf-8') as f:
    stopwords = {line.strip() for line in f}

# A font with Traditional Chinese glyphs, e.g.:
# wget https://raw.githubusercontent.com/victorgau/wordcloud/master/SourceHanSansTW-Regular.otf
font_path = 'SourceHanSansTW-Regular.otf'

def generate_wordcloud(text):
    # Remove stop words and whitespace tokens, then draw the word cloud
    filtered_words = [word for word in jieba.cut(text) if word.strip() and word not in stopwords]
    wordcloud = WordCloud(font_path=font_path, background_color='white').generate(' '.join(filtered_words))
    plt.imshow(wordcloud, interpolation='bilinear')
    plt.axis('off')
    plt.show()

generate_wordcloud(positive_process_text)
generate_wordcloud(negative_process_text)

#-------------------------------------------------------
# IPA Matrix
#-------------------------------------------------------
def calculate_value_counts_and_mean(data_frame, count_column, value_column):
    """
    For each distinct value of count_column, count its rows and average value_column.
    :param data_frame: DataFrame
    :param count_column: column whose values are counted (the dimension)
    :param value_column: column to average (the sentiment score)
    :return: DataFrame with columns Attribute, Importance (count) and Sentiment (mean)
    """
    return (data_frame.groupby(count_column)[value_column]
            .agg(Importance='count', Sentiment='mean')
            .reset_index()
            .rename(columns={count_column: 'Attribute'}))

result_df = calculate_value_counts_and_mean(df, 'aspect', 'Sentiment Score')
print(result_df)

#-------------------------------------------------------
# Plot IPA Matrix
#-------------------------------------------------------
# Leave out sentences that matched no dimension
plot_df = result_df[result_df['Attribute'] != 'unknown']

fig, ax = plt.subplots()
plot_df.plot(kind='scatter', x='Sentiment', y='Importance', ax=ax)

# Label each point with its dimension name
for row in plot_df.itertuples():
    ax.annotate(row.Attribute, (row.Sentiment, row.Importance))

ax.set_title('FTTA Analysis')
ax.set_xlabel('Sentiment')
ax.set_ylabel('Importance')

# Dashed lines at the mean sentiment and mean importance
ax.axvline(x=plot_df['Sentiment'].mean(), color='r', linestyle='--')
ax.axhline(y=plot_df['Importance'].mean(), color='r', linestyle='--')

plt.show()

R code

Sys.setlocale(category = "LC_ALL", locale = "C.UTF-8")

library(data.table)

library(jiebaR)

library(wordcloud)

library(stringr)

library(tm)

library(dplyr)

library(tidyverse)

library(tidytext)

library(purrr)

library(reshape2)

library(RColorBrewer)

library(tmcn)

library(wordcloud2)

library(webshot)

library(htmlwidgets)

library(igraph)

library(ggraph)

library(widyr)

library(ggplot2)

library(ggrepel)

library(xlsx)

#--------------- test code -------------

filename <- '3J.xlsx'

#--------------- test code -------------

TripText = NULL

#tenlong <- fread(file=filename, sep = ",", encoding = "UTF-8", header = FALSE, stringsAsFactors = FALSE)

tenlong <- read.xlsx(filename, sheetIndex = 1)

colnames(tenlong) <- c("text")

# Add a line number in front of the text column

tenlong <- tenlong %>%

mutate(line = row_number()) %>%

relocate(line)

tenlong <- tenlong[which(nchar(tenlong$text) >2),]

colnames(tenlong) <- c("line","text")

#=============================================================================

# Sentence splitting (explained below)

#=============================================================================

AZDF <- tenlong

test_text <- NULL

test_text <- AZDF

# Convert full-width and half-width sentence-ending punctuation to periods, and full-width commas to commas

for (p in c('。', '！', '？', '；', '!', '?', ';')) {

test_text$text <- gsub(pattern = p, replacement = '.', test_text$text, fixed = TRUE)

}

test_text$text <- gsub(pattern = '，', replacement = ',', test_text$text, fixed = TRUE)

test_text$text

split_into_sentences <- function(text){

#This is a function built off this Python solution that allows some flexibility in that the lists of prefixes, suffixes, etc. can be modified to your specific text. It's definitely not perfect, but could be useful with the right text.

caps = "([A-Z])"

prefixes = "(Mr|St|Mrs|Ms|Dr|Prof|Capt|Cpt|Lt|Mt)\\."

suffixes = "(Inc|Ltd|Jr|Sr|Co)"

acronyms = "([A-Z][.][A-Z][.](?:[A-Z][.])?)"

starters = "(Mr|Mrs|Ms|Dr|He\\s|She\\s|It\\s|They\\s|Their\\s|Our\\s|We\\s|But\\s|However\\s|That\\s|This\\s|Wherever)"

websites = "\\.(com|edu|gov|io|me|net|org)"

digits = "([0-9])"

text = gsub("\n|\r\n"," ", text)

text = gsub(prefixes, "\\1<prd>", text)

text = gsub(websites, "<prd>\\1", text)

text = gsub('www\\.', "www<prd>", text)

text = gsub("Ph.D.","Ph<prd>D<prd>", text)

text = gsub(paste0("\\s", caps, "\\. "), " \\1<prd> ", text)

text = gsub(paste0(acronyms, " ", starters), "\\1<stop> \\2", text)

text = gsub(paste0(caps, "\\.", caps, "\\.", caps, "\\."), "\\1<prd>\\2<prd>\\3<prd>", text)

text = gsub(paste0(caps, "\\.", caps, "\\."), "\\1<prd>\\2<prd>", text)

text = gsub(paste0(" ", suffixes, "\\. ", starters), " \\1<stop> \\2", text)

text = gsub(paste0(" ", suffixes, "\\."), " \\1<prd>", text)

text = gsub(paste0(" ", caps, "\\."), " \\1<prd>",text)

text = gsub(paste0(digits, "\\.", digits), "\\1<prd>\\2", text)

text = gsub("...", "<prd><prd><prd>", text, fixed = TRUE)

text = gsub('\\.”', '”.', text)

text = gsub('\\."', '\".', text)

text = gsub('\\!"', '"!', text)

text = gsub('\\?"', '"?', text)

text = gsub('\\.', '.<stop>', text)

text = gsub('\\?', '?<stop>', text)

text = gsub('\\!', '!<stop>', text)

text = gsub('<prd>', '.', text)

sentence = strsplit(text, "<stop>\\s*")

return(sentence)

}

df_sentences <- NULL

for (i in 1: nrow(AZDF)) {

df_sentences_t <- NULL

sentences <- split_into_sentences(test_text$text[i])

names(sentences) <- 'sentence'

df_sentences_t <- dplyr::bind_rows(sentences)

#chapterno <- rep(test_text$chapter[i],times=nrow(df_sentences_t))

lineno <- rep(test_text$line[i],times=nrow(df_sentences_t))

#dateno <- rep(test_text$t1date[i],times=nrow(df_sentences_t))

sentenceno <- c(1:nrow(df_sentences_t))

df_sentences_t <- dplyr::bind_cols(lineno, sentenceno,df_sentences_t)

colnames(df_sentences_t) <- c("line","sentenceno","text")

df_sentences <- dplyr::bind_rows(df_sentences,df_sentences_t)

}

AZDF01 <- df_sentences

AZDF01$text=gsub("\\.","",AZDF01$text)

AZDF02 <- AZDF01[which(nchar(AZDF01$text) >1),]

TripText <- AZDF02[,-2]

TripText01 <- TripText$line

TripText02 <- TripText$text

#=============================================================================

# Chinese sentiment dictionary TLSSD (Tsao, Lin & Su)

#=============================================================================

afinn_list <- fread(file = 'TLSSD.csv', encoding = "UTF-8", header = FALSE, stringsAsFactors = FALSE)

names(afinn_list) <- c('word', 'score')

afinn_list$word <- tolower(afinn_list$word)

#------------- get_noun ----------------------

get_noun = function(x){

stopifnot(inherits(x,"character"))

index = names(x) %in% c("n","nr","nr1","nr2","nrj","nrf","ns","nsf","nt","nz",

"nl","ng",

"a","ad","an","ag","al",

"v","vh","vg","vd","vn","vi*","vq*",

"d","x","b")

x[index]

}

#---------------tokenizer--------------------

chi_tokenizerN <- function (t) {

lapply(t, function(x){

tokens <- segment(x, jieba_tokenizerN)

tokens <- get_noun(tokens[nchar(tokens) > 1])

#tokens <- tokens[nchar(tokens) > 1]

return(tokens)

})

}

#---------------user define stop words ------

swords <- fread(file = 'stopwordsutf8.csv', encoding = "UTF-8", header = FALSE, stringsAsFactors = FALSE)

stopwords <- tibble(word = swords$V1)

# --------------------Jieba TAG and User Define Dict -----

#jieba_tokenizer = worker(type="tag", user = "three_kingdoms_lexicon.traditional.dict")

#jieba_tokenizer = worker(user = "three_kingdoms_lexicon.traditional.dict")

#jieba_tokenizer = worker("tag",write = "NOFILE")

#jieba_tokenizer = worker(write = "NOFILE",user = "tenlong8_user_dict.utf8")

#jieba_tokenizer = worker()

#------------------------------------------------------------

#jieba_tokenizer = worker("tag",write = "NOFILE")

jieba_tokenizerN = worker(type="tag")

#---------------- unnest -------------------------------

new_user_word(jieba_tokenizerN,

c("蔡英文", "韓國瑜","國安五法","中共代理人法",

"李登輝","馬英九","蔣經國","陳水扁","柯文哲",

"王美花","雞排","郭董","郭台銘","缺電","設備",

"台電","限電","電力","三接","下台"

))

tokens <- AZDF02 %>%

unnest_tokens(word, text, token = chi_tokenizerN) %>%

anti_join(stopwords)

text_line <- tokens$line

text_sentenceno <- tokens$sentenceno

text_word <- tokens$word

text_dataset <- tibble(line = text_line, sentenceno = text_sentenceno, word = text_word)

#------------------ sentiment Analysis -------------------------------

#------------------ calculate sentiment scores ---------------------

afinn_score_df <- text_dataset %>%

inner_join(afinn_list,by="word") %>%

group_by(line) %>%

arrange(line)

afinn_score_df

sentiment_score_df_sentence <- afinn_score_df %>%

group_by(line,sentenceno) %>%

arrange(line) %>%

summarise(sentiment=sum(as.numeric(score)) )

sentiment_score_df_sentence

sentiment_score_df_sentence %>%

summarise(sscore =mean(sentiment))

sentiment_score_df_sentence %>%

ungroup() %>%

summarise(sscore =mean(sentiment))

#------------------ Aspect / Dimensional -------------------------

positive_lines_df <- sentiment_score_df_sentence %>%

filter(sentiment > 0 ) %>%

left_join(text_dataset, by=c("line","sentenceno"))

positive_lines_df

negative_lines_df <- sentiment_score_df_sentence %>%

filter(sentiment < 0) %>%

left_join(text_dataset,by=c("line","sentenceno"))

negative_lines_df

sentiment_words_df <- rbind(positive_lines_df,negative_lines_df)

sentiment_words_df

#------------------ restore sentences with score of sentiment ------

positive_lines_df_sentences <-

unique(positive_lines_df[,1:3]) %>%

left_join(AZDF02,by=c("line","sentenceno")) %>%

left_join(sentiment_score_df_sentence,by=c("line","sentenceno"))

positive_lines_df_sentences

negative_lines_df_sentences <-

unique(negative_lines_df[,1:3]) %>%

left_join(AZDF02,by=c("line","sentenceno")) %>%

left_join(sentiment_score_df_sentence,by=c("line","sentenceno"))

negative_lines_df_sentences

sentiment_line_df_sentences <- rbind(positive_lines_df_sentences,negative_lines_df_sentences)

#--------------------------------------------------------

#---------------- frequency/counts of words------------

positive_tokens_count <- positive_lines_df %>%

anti_join(stopwords) %>%

#filter(chapter == cpt) %>%

group_by(word) %>%

summarise(n = n()) %>%

filter(n > 1) %>%

arrange(desc(n))

wordcloud2(positive_tokens_count)

negative_tokens_count <- negative_lines_df %>%

anti_join(stopwords) %>%

#filter(chapter == cpt) %>%

group_by(word) %>%

summarise(sum = n()) %>%

filter(sum > 1) %>%

arrange(desc(sum))

wordcloud2(negative_tokens_count)

#----------- Read the dimension feature keywords ---------------------------

#setwd("c:/SIDRWEB/DIMS/")

#dimfilename <- "dimfile.csv"

#diminfo <- fread(file=dimfilename, sep = ",", encoding = "UTF-8", header = FALSE, stringsAsFactors = FALSE)

#dimensions <- diminfo$V1

#------------------Local Testing-------------------------

selfdim_file_name <-'3JDim.xlsx'

#------------------Local Testing-------------------------

library(readxl)

selfdimfile <- read_excel(selfdim_file_name)

for(dim_name in unique(selfdimfile$Dimension)){

x <- subset( selfdimfile[which(selfdimfile$Dimension == dim_name) , 2 ])

#dimtxtname = paste0( selfdim_path,"/",dim_name, ".txt")

dimtxtname = dim_name

write.table(x,

file = dimtxtname,

row.names = FALSE,

col.names = FALSE,

quote = FALSE,

sep = "\t")}

dimensions <- unique(selfdimfile$Dimension)

#------------------ Aspect / Dimensional -------------------------

#setwd("c:/Koong/csv/DataScience/SERVQ")

sentiment_words_df_words <- sentiment_score_df_sentence %>%

left_join(text_dataset,by= c("line"="line", "sentenceno"="sentenceno"))

sentiment_words_df_words

score_of_dimensions <- NULL

score_of_dimensions <- lapply(dimensions,

FUN= function(x){

dimname <- x

dimname

x <- fread(file = x, encoding = "UTF-8", header = FALSE, stringsAsFactors = FALSE)

colnames(x) <- c("word")

anz_words <- tolower(x$word)

sentiment_DF <- sentiment_words_df_words %>%

filter(word %in% anz_words) %>%

#------------ keep only sentences with a non-zero sentiment score ---------

filter(as.numeric(sentiment) != 0) %>%

#-------------------------------------------

mutate(dimname = dimname) %>%

arrange(line) #%>%

# summarise(sentiment=mean(as.numeric(sentiment)))

# return(summarise(sentiment_DF, sscore =mean(sentiment)))

}

)

sdf <- NULL

for (i in 1:length(score_of_dimensions)) {

sdf <- rbind(as.data.frame(sdf),as.data.frame(score_of_dimensions[[i]]))

}

feature_words_df <-

sdf %>%

left_join(sentiment_line_df_sentences,by=c("line","sentenceno")) %>%

arrange(line)

# Drop the duplicated sentiment.x / sentiment.y columns created by the joins above

feature_words_df <- feature_words_df %>% select(-any_of(c("sentiment.x", "sentiment.y")))

#--------------------------------------------------------

score_of_dim <- feature_words_df %>%

filter(as.numeric(sentiment) != 0) %>%

group_by(dimname) %>%

summarise(sentiment=mean(as.numeric(sentiment)))

score_of_dim

#-----------Bar Plot -------------------------------------------------------------

sent.score <- score_of_dim$sentiment

names(sent.score) <- score_of_dim$dimname

colorBlind.8 <- c( orange="#E69F00", skyblue="#56B4E9", bluegreen="#009E73",

yellow="#F0E442", blue="#0072B2", reddish="#D55E00",

purplish="#CC79A7",black="#000099",green="#38D57D",

green01="#B9CC57",yellow01="#FFFF00", PINK="#FF9999")

cols <- colorBlind.8[1:length(sent.score)]

barplot(sent.score, xlab="Dimensions",

ylab="score of sentiment",

col = cols,

cex.axis=0.6,

cex.names = 0.6)

#-----------IPA OLD OK-----------------------------------------------------------------

ipa1 <- NULL

ipa1 <- feature_words_df %>%

group_by(dimname) %>%

summarise(count = n()) %>%

left_join(score_of_dim, by=c("dimname")) %>%

mutate( cmove = count - mean(count) ) %>%

mutate( smove = sentiment - mean(sentiment )) %>%

data.frame()

#----------------------------------------------------------

#--------------------------------------------------------------------

ipa <- ipa1

#--------IPA1 GGplot OK---------------------------------------------------------

empty_theme <- theme(

plot.background = element_blank(),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank(),

axis.line = element_blank(),

axis.ticks = element_blank(),

axis.text.y = element_text(angle = 90))

#setwd("/home/jodytsao/public_html/SDTACD")

#IPAfilename <- paste(currenttime,"IPA.png", sep='')

#png(filename=IPAfilename)

#----------------------------------------------------------

# ggplot(<data>) +              # set up the canvas

#   geom_<geometry type>(       # draw the geometric objects

#     aes(<coordinate mapping>) # map variables to coordinates

#   )

#-----------------------------------------------------------

ggplot(ipa, aes(x = smove, y = cmove, label = dimname)) +

#ggplot(ipa, aes(y = cmove, x = smove, label = word)) +

empty_theme +

theme(panel.border = element_rect(colour = "lightgrey", fill=NA, size=1))+

labs(title = "IPA analysis", y = "Counts of Dimension Sentences", x = "Sentiment Score of Dimension") +

geom_vline(xintercept = 0, colour = "lightgrey", size=0.5) +

geom_hline(yintercept = 0, colour = "lightgrey", size=0.5) +

geom_point( size = 0.5)+

geom_label_repel(size = 4, fill = "deepskyblue", colour = "black", min.segment.length = unit(0, "lines"))

#plot

#dev.off()

#-----------------------------------------

#----------- all words ------------------

#--------------------------------------------------------------------------------

ipa3 <- NULL

ipa3 <- sentiment_words_df %>%

group_by(word) %>%

summarise(sentiment = mean(sentiment),count = n()) %>%

filter( count > 20) %>%

mutate( cmove = count - mean(count) ) %>%

mutate( smove = sentiment - mean(sentiment )) %>%

data.frame()

#--------------------------------------------------------------------

ipa <- ipa3

#----------------------------------------------------------------------

empty_theme <- theme(

plot.background = element_blank(),

panel.grid.major = element_blank(),

panel.grid.minor = element_blank(),

panel.border = element_blank(),

panel.background = element_blank(),

axis.line = element_blank(),

axis.ticks = element_blank(),

axis.text.y = element_text(angle = 90))

ggplot(ipa, aes(y = cmove, x = smove, label = word)) +

empty_theme +

theme(panel.border = element_rect(colour = "lightgrey", fill=NA, size=1))+

labs(title = "IPA analysis" , x = "Sentiment Score of Word", y = "Counts of Word") +

geom_vline(xintercept = 0, colour = "lightgrey", size=0.5) +

geom_hline(yintercept = 0, colour = "lightgrey", size=0.5) +

geom_text_repel(max.overlaps = Inf)+ #always show all labels even overlap

geom_point( size = 0.5)

20240416

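1. Strategy Palette: strategy-selection analysis videos https://youtube.com/playlist?list=PLYRFygSk3wHB_MFivSr71ug6dO_6uL7IH&si=LEH6YQOO4kx6FMNy

2. Strategy Palette: strategy-selection analysis exercise (group assignment)

3. After watching the videos in item 1, try answering the three questions in "市場策略分析策略調色盤_練習.docx". Take each of three groups (ZARA, Tesla, and Coca Cola) as an example and, based on the brands it owns, answer the following questions:

- Choose the description that best matches your current strategy: pick one of A, B, C, D, E.

- Choose the description that best matches the business environment as you see it: pick one of F, G, H, I, J.

- Which strategy do you want to adopt? Pick one of K, L, M, N, O.

- Upload your group's answers, e.g. ZARA: A,F,K; Tesla: B,G,L; Coca Cola: C,H,M. Answer using Netflix (streaming platform, in-house productions) as the example.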

20240424 Correspondence Analysis

(1) A Simple Explanation of how Correspondence Analysis Works
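The core of correspondence analysis is one singular value decomposition of the standardized residuals of a contingency table: the squared singular values are the principal inertias (eigenvalues) of the dimensions, their sum is the total inertia, equal to the chi-square statistic divided by the grand total n, and the singular vectors give the row and column coordinates of the biplot. A minimal NumPy sketch of this standard computation on a made-up 3x3 table; the counts are hypothetical.

```python
import numpy as np
from scipy.stats import chi2_contingency

# Hypothetical 3x3 contingency table (e.g. brands x attributes), made-up counts
N = np.array([[20, 10, 5],
              [8, 15, 12],
              [4, 9, 25]], dtype=float)

n = N.sum()
P = N / n                  # correspondence matrix
r = P.sum(axis=1)          # row masses
c = P.sum(axis=0)          # column masses
expected = np.outer(r, c)  # proportions expected under independence

# Standardized residuals, then their SVD
S = (P - expected) / np.sqrt(expected)
U, sv, Vt = np.linalg.svd(S, full_matrices=False)

inertias = sv ** 2                # principal inertia (eigenvalue) of each dimension
share = inertias / inertias.sum()  # share of total inertia (what a scree plot shows)

# Principal coordinates of rows and columns (first two columns feed the biplot)
row_coords = (U * sv) / np.sqrt(r)[:, None]
col_coords = (Vt.T * sv) / np.sqrt(c)[:, None]

chi2 = chi2_contingency(N, correction=False)[0]
print(np.round(share, 3))
print(np.isclose(inertias.sum(), chi2 / n))  # prints True: total inertia = chi-square / n
```

A table with r rows and c columns has at most min(r, c) - 1 non-trivial dimensions, which is why the last singular value here is essentially zero.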

Python code (please verify for yourself that it runs)

# 匯入所需的函式庫

import pandas as pd

from sklearn.preprocessing import StandardScaler

from sklearn.decomposition import PCA

import matplotlib.pyplot as plt

import seaborn as sns

from scipy.stats import chi2_contingency

# 設定工作目錄

import os

os.chdir("D:/Koong/csv/")

# 讀取 csv 檔案

mobile = pd.read_csv("mobile.csv", index_col=0)

# 檢查資料集的前幾列

print(mobile.head())

# 進行卡方檢定

chi2, p, dof, ex = chi2_contingency(mobile)

print(f"Chi-square: {chi2}, p-value: {p}")

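# Run the correspondence analysis (requires the prince package)
import numpy as np
from prince import CA

ca = CA(n_components=2)  # raise n_components to show more dimensions in the scree plot
ca = ca.fit(mobile)

# Eigenvalues (principal inertias) and the percentage of total inertia per dimension
eig_val = np.asarray(ca.eigenvalues_)
pct_var = 100 * eig_val / ca.total_inertia_
print(eig_val)
print(pct_var)

# Scree plot in percentages, so the 33.33% reference line is on the same scale
sns.set(style="whitegrid")
plt.figure(figsize=(10, 6))
dims = range(1, len(pct_var) + 1)
plt.bar(dims, pct_var, alpha=0.5, align='center', label='individual explained inertia (%)')
plt.step(dims, pct_var.cumsum(), where='mid', label='cumulative explained inertia (%)')
plt.ylabel('Explained inertia (%)')
plt.xlabel('Dimension')
plt.title('Scree Plot')
plt.axhline(y=33.33, color='r', linestyle='--')
plt.legend(loc='best')
plt.show()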

# Biplot
fig, ax = plt.subplots(figsize=(10, 8))

# Row and column coordinates on the first two dimensions
row_coords = ca.row_coordinates(mobile)
col_coords = ca.column_coordinates(mobile)

# Plot the row points
ax.scatter(row_coords[0], row_coords[1], alpha=0.5)
for txt, x, y in zip(row_coords.index, row_coords[0], row_coords[1]):
    ax.annotate(txt, (x, y), alpha=0.75)

# Plot the column points
ax.scatter(col_coords[0], col_coords[1], alpha=0.5, color='red')
for txt, x, y in zip(col_coords.index, col_coords[0], col_coords[1]):
    ax.annotate(txt, (x, y), alpha=0.75, color='red')

ax.set_xlabel('Dimension 1')
ax.set_ylabel('Dimension 2')
ax.set_title('Correspondence Analysis Biplot')
plt.grid()
plt.show()

# Contribution of each row to dimensions 1 and 2
# (recent prince versions expose these as the row_contributions_ attribute)
row_contrib = ca.row_contributions_
top_contrib1 = row_contrib[0].nlargest(10)
top_contrib2 = row_contrib[1].nlargest(10)

plt.figure(figsize=(10, 6))
sns.barplot(x=top_contrib1.values, y=top_contrib1.index, palette="viridis")
plt.title('Top 10 Contributions of Rows to Dimension 1')
plt.xlabel('Contribution')
plt.ylabel('Rows')
plt.show()

plt.figure(figsize=(10, 6))
sns.barplot(x=top_contrib2.values, y=top_contrib2.index, palette="viridis")
plt.title('Top 10 Contributions of Rows to Dimension 2')
plt.xlabel('Contribution')
plt.ylabel('Rows')
plt.show()

# Row points coloured by their combined contribution to dimensions 1 and 2
plt.figure(figsize=(10, 8))
sns.scatterplot(x=row_coords[0], y=row_coords[1], hue=row_contrib[0] + row_contrib[1], palette="viridis", legend=False)
for txt, x, y in zip(row_coords.index, row_coords[0], row_coords[1]):
    plt.annotate(txt, (x, y), alpha=0.75)
plt.xlabel('Dimension 1')
plt.ylabel('Dimension 2')
plt.title('Correspondence Analysis Biplot (Rows Colored by Contribution)')
plt.grid()
plt.show()
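The prince call above hides the actual computation. As a rough guide to what correspondence analysis does, here is a minimal NumPy sketch of the textbook algorithm: turn the counts into proportions, compute standardized residuals from independence, and take their SVD. The 3×3 table is invented for illustration, and axis signs may come out flipped relative to prince or FactoMineR, which is normal for SVD-based methods. The sketch also shows why the chi-square test and the scree plot are linked: the total inertia equals χ² divided by the grand total.

```python
import numpy as np


def correspondence_analysis(N):
    """Textbook correspondence analysis of a contingency table N (rows x columns).

    Returns row and column principal coordinates, the eigenvalues
    (principal inertias) and the total inertia.
    """
    N = np.asarray(N, dtype=float)
    P = N / N.sum()                          # correspondence matrix
    r = P.sum(axis=1)                        # row masses
    c = P.sum(axis=0)                        # column masses
    expected = np.outer(r, c)                # P under independence
    S = (P - expected) / np.sqrt(expected)   # standardized residuals
    U, s, Vt = np.linalg.svd(S, full_matrices=False)
    k = min(N.shape) - 1                     # number of non-trivial dimensions
    U, s, V = U[:, :k], s[:k], Vt[:k].T
    row_coords = U * s / np.sqrt(r)[:, None]
    col_coords = V * s / np.sqrt(c)[:, None]
    return row_coords, col_coords, s ** 2, (S ** 2).sum()


# Hypothetical 3 x 3 table of counts, for illustration only
table = np.array([[30, 10, 5],
                  [10, 40, 10],
                  [5, 10, 30]])
F, G, eig, total_inertia = correspondence_analysis(table)

# Total inertia equals the chi-square statistic divided by the grand total
expected_counts = np.outer(table.sum(axis=1), table.sum(axis=0)) / table.sum()
chi2 = ((table - expected_counts) ** 2 / expected_counts).sum()
print(100 * eig / total_inertia)   # % of inertia per dimension (the scree plot values)
print(chi2 / table.sum(), total_inertia)
```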

R code

library("FactoMineR")

library("factoextra")

setwd("D:/Koong/csv/")

mobile <- read.csv("mobile.csv", stringsAsFactors = TRUE)

colnames(mobile) <- c(NA,"Screen","Price","Design","Battery","Software","Camera")

head(mobile)

rownames(mobile) <- mobile[,1]

mobile <- mobile[,-1]

#the association is highly significant (chi-square: 1944.456, p = 0).

chisq <- chisq.test(mobile)

chisq

#---------- compute CA ------------------

res.ca <- CA(mobile, graph = FALSE)

print(res.ca)

#Extract the eigenvalues/variances retained by each dimension (axis)

eig.val <- get_eigenvalue(res.ca)

eig.val

fviz_screeplot(res.ca, addlabels = TRUE, ylim = c(0, 50))

# Scree plot with a dashed red reference line at 33.33%
fviz_screeplot(res.ca) +
  geom_hline(yintercept = 33.33, linetype = 2, color = "red")

#------------ Biplot -------------------------------------
# repel = TRUE avoids overlapping text (slow when there are many points)
fviz_ca_biplot(res.ca, repel = TRUE)

#----------------------------------------------

fviz_ca_row(res.ca, col.row = "cos2",

gradient.cols = c("#00AFBB", "#E7B800", "#FC4E07"),

repel = TRUE)

# Contributions of rows to dimension 1

fviz_contrib(res.ca, choice = "row", axes = 1, top = 10)

# Contributions of rows to dimension 2

fviz_contrib(res.ca, choice = "row", axes = 2, top = 10)

#--------------------------------------------------------

fviz_ca_row(res.ca, col.row = "contrib",

gradient.cols = c("#00AFBB", "#E7B800", "#FC4E07"),

repel = TRUE)
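fviz_contrib() and col.row = "cos2" report how strongly each row drives a dimension and how well the row is represented on it. A NumPy sketch of the standard definitions, under the assumption that these are what factoextra plots: contribution = r_i·F_ik² / λ_k (in %), cos2 = F_ik² / Σ_k F_ik². The dashed line drawn by fviz_contrib corresponds to a uniform contribution of 100 / number of rows. The sketch also encodes a rule of thumb that the 33.33% scree line appears to come from: an axis is worth keeping if it explains more than max(100/(rows − 1), 100/(cols − 1)) percent. 33.33 is what that gives for a four-column (or four-row) table, so it is worth recomputing for the six-column mobile table, where the column side alone gives 100/5 = 20%. The 4×3 table below is invented.

```python
import numpy as np


def ca_row_diagnostics(N):
    """Row contributions (%) and cos2 per CA dimension, computed from scratch."""
    N = np.asarray(N, dtype=float)
    P = N / N.sum()
    r, c = P.sum(axis=1), P.sum(axis=0)                  # row and column masses
    S = (P - np.outer(r, c)) / np.sqrt(np.outer(r, c))   # standardized residuals
    U, s, _ = np.linalg.svd(S, full_matrices=False)
    k = min(N.shape) - 1
    F = U[:, :k] * s[:k] / np.sqrt(r)[:, None]          # row principal coordinates
    eig = s[:k] ** 2
    contrib = 100 * r[:, None] * F ** 2 / eig            # each column sums to 100
    cos2 = F ** 2 / (F ** 2).sum(axis=1, keepdims=True)  # each row sums to 1
    return contrib, cos2


def scree_reference_line(n_rows, n_cols):
    """Rule-of-thumb cut-off (%) above which a CA axis is considered important."""
    return max(100 / (n_rows - 1), 100 / (n_cols - 1))


# Hypothetical 4 x 3 table of counts, for illustration only
table = [[30, 10, 5], [10, 40, 10], [5, 10, 30], [20, 20, 20]]
contrib, cos2 = ca_row_diagnostics(table)
print(np.round(contrib, 1))
print(np.round(cos2, 2))
print(100 / len(table))             # uniform-contribution line, as in fviz_contrib
print(scree_reference_line(4, 3))   # 50.0 for a 4 x 3 table
```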

20240430

Clustering Analysis / Segmentation

1. Demographics: USCity.xlsx / ClusterMean0501.R -> USCities.csv

2. RFM analysis: onlinestore1220.xlsx / ClusterSegment_0501.R -> onlinestore1220.csv

Cluster_K-Means R code

library(cluster)
library(factoextra)
library(dplyr)  # for %>%, mutate(), filter(), group_by() and summarise()

setwd("/home/jodytsao/DataScienceMarketing/csv/")

USCity <- read.csv("USCities.csv")

res.data01 <- USCity[3:8]

USCityData <- as.data.frame(scale(res.data01))

#--------------------------------------------------------------------------------

for(n_cluster in 2:8){

cluster <- kmeans(USCityData, n_cluster)

silhouetteScore <- mean(

silhouette(

cluster$cluster,

dist(USCityData, method = "euclidean")

)[,3]

)

print(sprintf('Silhouette Score for %i Clusters: %0.4f', n_cluster, silhouetteScore))

}

#---------------------------------------------------------------------------------

# For reproducibility
set.seed(123)

# K-means with 4 clusters
USCityDataClusterData <- kmeans(USCityData, 4)

#### 3. Segmentation via K-Means Clustering ####
# Cluster centers (in standardized units)
USCityDataClusterData$centers

# Attach the cluster labels to the original data
USCityCluster <- USCity %>%
  mutate(Cluster = USCityDataClusterData$cluster)

USCityCluster %>% group_by(Cluster) %>% summarise(Count = n())

# Summary of cluster 4 (cluster numbers are arbitrary, so check the centers
# to see which cluster is the high-value one)
summary(USCityCluster[which(USCityCluster$Cluster == 4), ])

# Cities in each cluster
for (k in 1:4) {
  print(USCityCluster %>% filter(Cluster == k) %>% select(City))
}
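The loops in this section choose the number of clusters by the average silhouette width; silhouette(...)[, 3] is the per-point width s(i). A minimal NumPy sketch of that formula, assuming Euclidean distance as in the R code and the usual convention that a point alone in its cluster gets s(i) = 0. The four toy points are made up.

```python
import numpy as np


def silhouette_values(X, labels):
    """Per-point silhouette width s(i) = (b - a) / max(a, b), Euclidean distance."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    D = np.sqrt(((X[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))  # pairwise distances
    s = np.zeros(len(X))
    for i in range(len(X)):
        own = labels == labels[i]
        n_own = own.sum() - 1                    # other points in the same cluster
        if n_own == 0:
            continue                             # singleton cluster: s(i) = 0 by convention
        a = D[i, own].sum() / n_own              # mean distance to own cluster (D[i, i] = 0)
        b = min(D[i, labels == k].mean()         # mean distance to the nearest other cluster
                for k in np.unique(labels) if k != labels[i])
        s[i] = (b - a) / max(a, b)
    return s


# Toy data: two well-separated pairs of points
X = [[0, 0], [0, 1], [10, 0], [10, 1]]
good = silhouette_values(X, [1, 1, 2, 2]).mean()
bad = silhouette_values(X, [1, 2, 1, 2]).mean()
print(round(good, 3), round(bad, 3))
```

Values near 1 mean tight, well-separated clusters; negative values mean points sit closer to another cluster than to their own, which is why the k with the highest average is preferred.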

Customer Segmentation R code

library(dplyr)

library(ggplot2)

library(cluster)

#### 1. Load Data ####

setwd("/home/jodytsao/DataScienceMarketing/")
df1 <- read.csv("csv/OnlineStore1220.csv", header = TRUE, stringsAsFactors = TRUE)
res.data01 <- df1[3:5]
normalizedDF <- as.data.frame(scale(res.data01))

#### 3. Customer Segmentation via K-Means Clustering ####
set.seed(123)  # for reproducibility
cluster <- kmeans(normalizedDF, 4)

# cluster centers
cluster$centers

# cluster labels
df2 <- df1 %>%
  mutate(Cluster = cluster$cluster)

#df2 %>% group_by(Cluster) %>% summarise(Count=n())

df2[df2$Cluster == 1, ] %>%
  group_by(CustomerID)

#---------------------------------------

# Selecting the best number of clusters

#---------------------------------------

for(n_cluster in 2:8){

cluster <- kmeans(normalizedDF[c("TotalSales", "OrderCount", "AvgOrderValue")], n_cluster)

silhouetteScore <- mean(

silhouette(

cluster$cluster,

dist(normalizedDF[c("TotalSales", "OrderCount", "AvgOrderValue")], method = "euclidean")

)[,3]

)

print(sprintf('Silhouette Score for %i Clusters: %0.4f', n_cluster, silhouetteScore))

}

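The segmentation uses three per-customer features (TotalSales, OrderCount, AvgOrderValue, columns 3 to 5 of OnlineStore1220.csv), which cover the frequency and monetary parts of RFM (no recency). If you need to build such features from raw order lines, a plain-Python sketch follows; the input layout (customer_id, invoice_no, amount) and the sample rows are hypothetical.

```python
from collections import defaultdict


def customer_features(rows):
    """rows: iterable of (customer_id, invoice_no, amount) order lines.

    Returns {customer_id: (TotalSales, OrderCount, AvgOrderValue)}.
    """
    sales = defaultdict(float)
    invoices = defaultdict(set)
    for cust, invoice, amount in rows:
        sales[cust] += amount          # monetary: total spend
        invoices[cust].add(invoice)    # frequency: distinct orders
    return {
        cust: (sales[cust], len(invoices[cust]), sales[cust] / len(invoices[cust]))
        for cust in sales
    }


# Hypothetical order lines: C1 placed two orders (A, B), C2 placed one (C)
lines = [("C1", "A", 10.0), ("C1", "A", 5.0), ("C1", "B", 30.0), ("C2", "C", 8.0)]
features = customer_features(lines)
print(features)
```

Because TotalSales is on a much larger scale than OrderCount, the features are standardized with scale() before k-means, as in the R code; otherwise sales alone would dominate the distances.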

Cluster_K-Means Python code (please check for yourself that it runs)

# Import the required libraries
import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score

# Set the working directory
import os
os.chdir("/home/jodytsao/DataScienceMarketing/csv/")

# Read the CSV file
USCity = pd.read_csv("USCities.csv")

# Select the feature columns (columns 3-8, as in the R code) and standardize them
res_data01 = USCity.iloc[:, 2:8]
scaler = StandardScaler()
USCityData = scaler.fit_transform(res_data01)

# Silhouette score for different numbers of clusters
for n_cluster in range(2, 9):
    kmeans = KMeans(n_clusters=n_cluster, n_init=10, random_state=0)
    cluster_labels = kmeans.fit_predict(USCityData)
    silhouette_avg = silhouette_score(USCityData, cluster_labels)
    print(f'Silhouette Score for {n_cluster} Clusters: {silhouette_avg:.4f}')

# K-means with 4 clusters; random_state makes the result reproducible
kmeans = KMeans(n_clusters=4, n_init=10, random_state=0)
USCityDataClusterData = kmeans.fit(USCityData)

# Cluster centers (standardized units)
print(USCityDataClusterData.cluster_centers_)

# Add the cluster labels to the original data
USCity['Cluster'] = USCityDataClusterData.labels_

# Number of cities per cluster
print(USCity.groupby('Cluster').size())

# Summary statistics for one cluster. Labels start at 0 here (1 in R) and are
# arbitrary, so check the centers to find the high-value cluster
print(USCity[USCity['Cluster'] == 3].describe())

# Cities in each cluster
for cluster_num in range(4):
    cities_in_cluster = USCity[USCity['Cluster'] == cluster_num]['City']
    print(f'Cities in Cluster {cluster_num}:')
    print(cities_in_cluster)
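Both the R kmeans() and scikit-learn KMeans calls run variants of the same loop: assign every point to its nearest center, move each center to the mean of its points, repeat until nothing changes. A minimal NumPy sketch of that loop (Lloyd's algorithm) with z-scoring first. Two assumptions to note: it uses a simple deterministic farthest-first initialisation instead of scikit-learn's k-means++ with several restarts, and it standardizes with the sample standard deviation like R's scale(), whereas StandardScaler uses the population standard deviation, so the two z-scores differ by a factor of sqrt(n/(n−1)). The toy data are made up.

```python
import numpy as np


def standardize(X):
    """Column z-scores, like R's scale() (sample standard deviation, ddof=1)."""
    X = np.asarray(X, dtype=float)
    return (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)


def farthest_first_centers(X, k):
    """Deterministic initialisation: start at X[0], then repeatedly add the farthest point."""
    centers = [X[0]]
    for _ in range(1, k):
        d2 = np.min([((X - c) ** 2).sum(axis=1) for c in centers], axis=0)
        centers.append(X[d2.argmax()])
    return np.array(centers)


def kmeans_lloyd(X, k, max_iter=100):
    """Lloyd's algorithm: alternate the assignment and centroid-update steps."""
    centers = farthest_first_centers(X, k)
    for _ in range(max_iter):
        # Assignment step: each point joins its nearest center (squared Euclidean distance)
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=-1)
        labels = d2.argmin(axis=1)
        # Update step: each center moves to the mean of its assigned points
        new_centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                                for j in range(k)])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers


# Toy data: two obvious groups, with the second feature on a much larger scale
raw = [[1, 100], [1.2, 80], [0.9, 110], [8, 800], [8.2, 790], [7.9, 810]]
Z = standardize(raw)
labels, centers = kmeans_lloyd(Z, 2)
print(labels)
```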

Customer Segmentation Python code (please check for yourself that it runs)

# Import the required libraries
import pandas as pd
from sklearn.preprocessing import StandardScaler
from sklearn.cluster import KMeans
from sklearn.metrics import silhouette_score

# Set the working directory
import os
os.chdir("/home/jodytsao/DataScienceMarketing/")

# Read the CSV file
df1 = pd.read_csv("csv/OnlineStore1220.csv")

# Select the feature columns (columns 3-5, as in the R code) and standardize them
res_data01 = df1.iloc[:, 2:5]
scaler = StandardScaler()
normalizedDF = pd.DataFrame(scaler.fit_transform(res_data01), columns=res_data01.columns)

# K-means with 4 clusters
kmeans = KMeans(n_clusters=4, n_init=10, random_state=0)
kmeans.fit(normalizedDF)

# Cluster centers (standardized units)
print(kmeans.cluster_centers_)

# Add the cluster labels to the original data
df1['Cluster'] = kmeans.labels_

# Number of customers per cluster
print(df1.groupby('Cluster').size())

# Customers in cluster 1 (labels start at 0 in scikit-learn)
print(df1[df1['Cluster'] == 1].groupby('CustomerID').size())

# Silhouette score for different numbers of clusters
features = normalizedDF[['TotalSales', 'OrderCount', 'AvgOrderValue']]
for n_cluster in range(2, 9):
    km = KMeans(n_clusters=n_cluster, n_init=10, random_state=0)
    cluster_labels = km.fit_predict(features)
    silhouette_avg = silhouette_score(features, cluster_labels)
    print(f'Silhouette Score for {n_cluster} Clusters: {silhouette_avg:.4f}')
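kmeans.cluster_centers_ is printed in standardized units, which are hard to read as customer profiles. scikit-learn's scaler.inverse_transform(kmeans.cluster_centers_) converts them back; the arithmetic behind it is just z·σ + μ, sketched here with NumPy on made-up numbers. Because k-means centers are cluster means and z-scoring is affine, the result should essentially agree with df1.groupby('Cluster')[['TotalSales', 'OrderCount', 'AvgOrderValue']].mean().

```python
import numpy as np


def centers_in_original_units(X, z_centers):
    """Undo StandardScaler-style z-scores (mean and population std, ddof=0)."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)            # ddof=0, as StandardScaler uses
    return np.asarray(z_centers, dtype=float) * sigma + mu


# Hypothetical customer features: TotalSales, OrderCount
X = [[100, 2], [300, 4]]
restored = centers_in_original_units(X, [[1, -1], [0, 0]])
print(restored)
```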

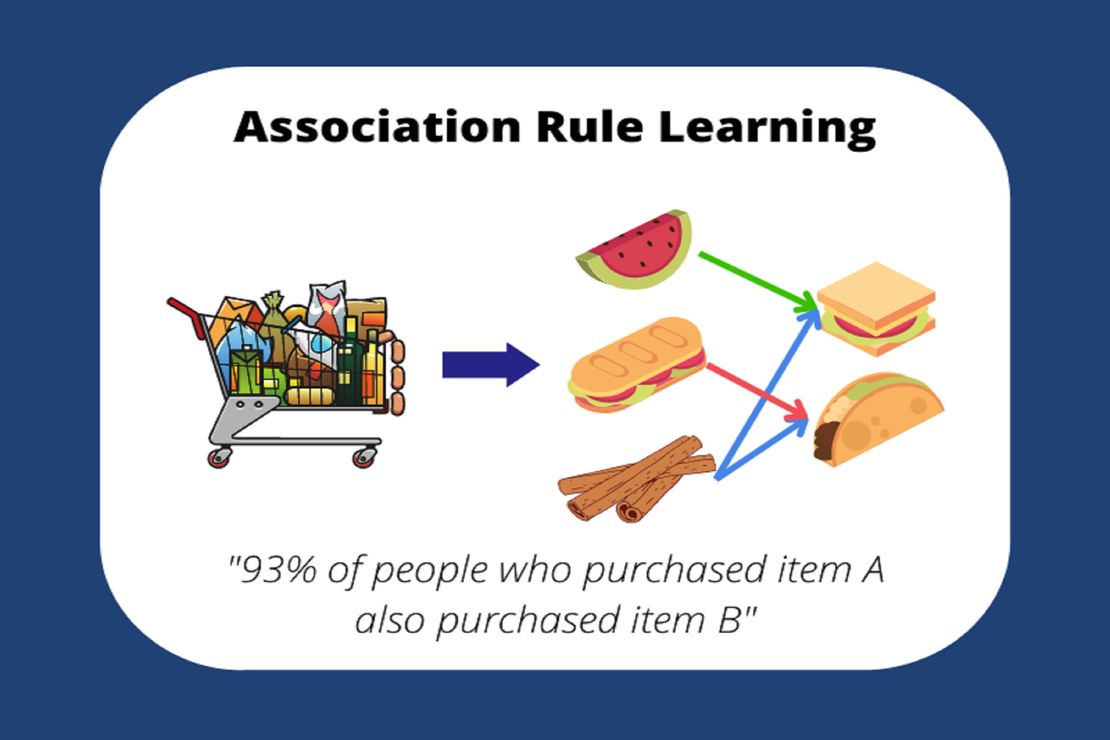

20240508 Association Rules (the beer-and-diapers example)

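Instruction 1:

Please remember the following four dimensions and their definitions; they will be used for the grouping that follows:

- Experience: everything visitors feel during the trip. The term summarizes the various feelings and experiences the trip brings, as well as the overall evaluation of those experiences.

- Fulfillment: the meaning and satisfaction visitors derive from the trip, reflecting whether they consider the trip meaningful and whether they gained personal satisfaction from it.

- Discovery: the new discoveries and learning visitors gain during the trip, including self-knowledge, newly acquired information, and excitement about new experiences.

- Unique: the uniqueness of the journey, indicating that the trip was a once-in-a-lifetime experience or an opportunity to experience local culture up close.

Next, I have four rules for you to follow:

1. When grouping, classify the words by how similar their meanings are.

2. Each word may be assigned to only one category.

3. Use only the words I have given you; do not add other words found online.

Following the rules above, group the words below:

Instruction 2 (continuing from the results of Instruction 1):

The dimension Experience is defined as: everything visitors feel during the trip; the term summarizes the various feelings and experiences the trip brings, as well as the overall evaluation of those experiences. From the following 187 words (the words grouped under Experience in Instruction 1), extract the 20 words best suited to measuring this dimension:

The dimension Fulfillment is defined as: the meaning and satisfaction visitors derive from the trip, reflecting whether they consider the trip meaningful and whether they gained personal satisfaction from it. From the following 22 words (the words grouped under Fulfillment in Instruction 1), extract the 20 words best suited to measuring this dimension:

The dimension Discovery is defined as: the new discoveries and learning visitors gain during the trip, including self-knowledge, newly acquired information, and excitement about new experiences. From the following 103 words (the words grouped under Discovery in Instruction 1), extract the 20 words best suited to measuring this dimension:

The dimension Unique is defined as: the uniqueness of the journey, indicating that the trip was a once-in-a-lifetime experience or an opportunity to experience local culture up close. From the following 164 words (the words grouped under Unique in Instruction 1), extract the 20 words best suited to measuring this dimension: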

Reddit Traveling to Taiwan Posts

Prompt 1.

Assume you are an academic researcher. Based on the following definitions of Experience, Fulfillment, Discovery, and Unique:

(1) Experience: It refers to travelers' mindset and experiences of seeking joy and happiness during their journey. This dimension highlights travelers' emphasis on the diversity of experiences and exploration while pursuing pleasure and enjoyment, as well as the positive emotions and satisfaction gained during the journey.

(2) Fulfillment: It refers to the degree to which travelers find profound meaning in the overall journey, reflecting a desire to seek spiritual fulfillment and personal growth during the trip. This dimension encompasses travelers' pursuit of pleasure and novelty while also focusing on the deeper significance of travel, such as respect for culture, engagement in meaningful activities, and the pursuit of personal development.

(3) Discovery: It refers to the various knowledge and information gained by travelers during the journey. This dimension highlights the importance travelers place on seeking knowledge and learning during their travels, viewing travel as a pathway to enriching life experiences.

(4) Unique: It refers to the sense of novelty that travelers seek, experience, and cherish during their journey. This dimension emphasizes travelers' pursuit of diverse, unique, and captivating experiences, as well as the pleasure and fulfillment derived from these experiences.

Please categorize the consumer-review sentences I will give you later according to the dimension definitions above (Experience, Fulfillment, Discovery, and Unique).

Prompt 2.

Assume you are an academic researcher. Based on the following definitions of Experience, Fulfillment, Discovery, and Unique:

(1) Experience: It refers to travelers' mindset and experiences of seeking joy and happiness during their journey. This dimension highlights travelers' emphasis on the diversity of experiences and exploration while pursuing pleasure and enjoyment, as well as the positive emotions and satisfaction gained during the journey.

(2) Fulfillment: It refers to the degree to which travelers find profound meaning in the overall journey, reflecting a desire to seek spiritual fulfillment and personal growth during the trip. This dimension encompasses travelers' pursuit of pleasure and novelty while also focusing on the deeper significance of travel, such as respect for culture, engagement in meaningful activities, and the pursuit of personal development.

(3) Discovery: It refers to the various knowledge and information gained by travelers during the journey. This dimension highlights the importance travelers place on seeking knowledge and learning during their travels, viewing travel as a pathway to enriching life experiences.

(4) Unique: It refers to the sense of novelty that travelers seek, experience, and cherish during their journey. This dimension emphasizes travelers' pursuit of diverse, unique, and captivating experiences, as well as the pleasure and fulfillment derived from these experiences.

Please extract keywords from the consumer-review sentences I will give you later, based on the dimension definitions above (Experience, Fulfillment, Discovery, and Unique).

Association rules: R code

# Installing packages (once): install.packages(c("arules", "arulesViz", "xlsx"))
# Loading packages
# xlsx depends on Java; if R cannot find it, set JAVA_HOME first, e.g.
#Sys.setenv(JAVA_HOME='C:\\Program Files\\Java\\jre1.8.0_291')
library(arules)
library(arulesViz)
library(xlsx)

setwd("c:/Koong/csv/DataScience")
beer <- read.xlsx("beerAR.xlsx", header = TRUE, sheetIndex = 1)
dataset <- as.matrix(beer)

# Structure
str(dataset)

# Setting the seed (apriori itself is deterministic)
set.seed(220)

associa_rules <- apriori(data = dataset,
                         parameter = list(support = 0.3,
                                          confidence = 0.5))

#--------------------------------------------------------

rules <- associa_rules[size(lhs(associa_rules)) > 0]

#--------------------------------------------------------

# Plot

#itemFrequencyPlot(dataset, topN = 5)

# Visualising the results

inspect(sort(rules, by = 'confidence'))

plot(rules, method = "graph",

measure = "confidence", shading = "lift")
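apriori() keeps rules that pass the support (0.3) and confidence (0.5) thresholds, and the graph is shaded by lift. A plain-Python sketch of how those three measures are defined for a single rule lhs ⇒ rhs; the baskets are hypothetical.

```python
def rule_metrics(transactions, lhs, rhs):
    """Support, confidence and lift of the rule lhs => rhs.

    transactions: list of sets of items; lhs, rhs: sets of items.
    """
    n = len(transactions)
    n_lhs = sum(lhs <= t for t in transactions)            # baskets containing lhs
    n_rhs = sum(rhs <= t for t in transactions)            # baskets containing rhs
    n_both = sum((lhs | rhs) <= t for t in transactions)   # baskets containing both
    support = n_both / n                 # P(lhs and rhs)
    confidence = n_both / n_lhs          # P(rhs | lhs)
    lift = confidence / (n_rhs / n)      # > 1: lhs and rhs co-occur more than by chance
    return support, confidence, lift


# Hypothetical baskets, for illustration only
baskets = [{"beer", "diapers"}, {"beer", "diapers", "chips"}, {"beer"},
           {"diapers", "milk"}, {"milk"}]
support, confidence, lift = rule_metrics(baskets, {"beer"}, {"diapers"})
print(support, confidence, lift)
```

Here beer ⇒ diapers has support 0.4 and confidence 2/3, so it would pass the thresholds used above, and its lift of 10/9 means diapers are slightly more likely in baskets that contain beer.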

Association rules: Python code (please check for yourself that it runs)

# Import the required libraries
import os
import pandas as pd
import matplotlib.pyplot as plt
import networkx as nx
from mlxtend.frequent_patterns import apriori, association_rules

# Set the working directory
os.chdir("c:/Koong/csv/DataScience")

# Read the Excel file (reading .xlsx requires openpyxl to be installed)
beer = pd.read_excel("beerAR.xlsx", sheet_name=0)

# Inspect the data
print(beer.head())

# mlxtend expects a one-hot True/False table; this assumes every column is an item coded 0/1
beer = beer.astype(bool)

# Apriori is deterministic, so no random seed is needed
# Find frequent itemsets with apriori
frequent_itemsets = apriori(beer, min_support=0.3, use_colnames=True)

# Derive the association rules
rules = association_rules(frequent_itemsets, metric="confidence", min_threshold=0.5)

# Keep only rules whose left-hand side (antecedents) is non-empty
rules = rules[rules['antecedents'].apply(lambda x: len(x) > 0)]

# Sort by confidence and print the rules
sorted_rules = rules.sort_values(by='confidence', ascending=False)
print(sorted_rules)

# Draw the rules as a directed graph
def plot_association_rules(rules):
    G = nx.DiGraph()
    for _, rule in rules.iterrows():
        for antecedent in rule['antecedents']:
            for consequent in rule['consequents']:
                G.add_edge(antecedent, consequent, weight=rule['confidence'])
    pos = nx.spring_layout(G)
    plt.figure(figsize=(12, 8))
    nx.draw(G, pos, with_labels=True, node_size=3000, node_color="skyblue",
            font_size=10, font_color="black", font_weight="bold", edge_color="grey")
    plt.title("Association Rules Graph")
    plt.show()

plot_association_rules(sorted_rules)
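Both arules' apriori() and mlxtend's apriori() first find the frequent itemsets level by level, then derive rules from them. A plain-Python sketch of the textbook level-wise search with its join and prune steps; the library implementations are optimized differently, and the baskets are hypothetical.

```python
from itertools import combinations


def apriori_itemsets(transactions, min_support):
    """Level-wise Apriori search; returns {frozenset(itemset): support}."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support(itemset):
        return sum(itemset <= t for t in transactions) / n

    # Level 1: frequent single items
    items = {item for t in transactions for item in t}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in current}
    k = 2
    while current:
        # Join step: combine frequent (k-1)-itemsets into k-item candidates
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: every (k-1)-subset of a frequent itemset must itself be frequent
        candidates = {c for c in candidates
                      if all(frozenset(sub) in current for sub in combinations(c, k - 1))}
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update({c: support(c) for c in current})
        k += 1
    return frequent


baskets = [{"beer", "diapers"}, {"beer", "diapers", "chips"}, {"beer"},
           {"diapers", "milk"}, {"milk"}]
for itemset, s in sorted(apriori_itemsets(baskets, 0.3).items(), key=lambda kv: -kv[1]):
    print(sorted(itemset), s)
```

The prune step is what makes Apriori efficient: if an itemset is infrequent, no larger itemset containing it can be frequent, so those candidates are never counted.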

20240511 Decision Tree

Azure Machine Learning Studio (https://studio.azureml.net) (大數據分析_2023.pptx); CustomerLifetimeValue dataset (CustomerValueClean.csv)

Decision Tree R code

library(C50)

library(gmodels)

library(party)

library(RColorBrewer)

require(caret)

require(e1071)

setwd("c://Koong//csv//DataScience//DSMK")
credit <- read.csv("CustomerValueClean.csv")
# Treat the target as a class label so that caret fits classification trees
credit$Response <- as.factor(credit$Response)

#============== Create the training and test datasets =================
set.seed(100)
# Step 1: Get row numbers for the training data (70%, stratified on Response)
trainRowNumbers <- createDataPartition(credit$Response, p = 0.7, list = FALSE)
# Step 2: Create the training dataset
trainData <- credit[trainRowNumbers, ]
# Step 3: Create the test dataset
testData <- credit[-trainRowNumbers, ]

# Store X (independent variables) and Y (dependent variable) for later use;
# this assumes Response is the third column
x = trainData[, -3]
y = trainData$Response

#--------------------------------------------------------------------
# To avoid overfitting, tune the tree with K-fold cross-validation using
# the caret package; here K = 3 (number = 3 in trainControl)
#---------------------------------- rpart + CARET ----------------
modelLookup("rpart")
train_control <- trainControl(method = "cv", number = 3)
train_control.model <- train(Response ~ .,
                             data = trainData,
                             method = "rpart",
                             trControl = train_control,
                             tuneLength = 6)
train_control.model

#--------- Prediction ---------------------------------

pred <- predict(train_control.model, newdata=testData)

# Use table() to compare actual and predicted classes
table(real=testData$Response, predict=pred)

# Prediction accuracy = diagonal count / total count
confus.matrix <- table(real=testData$Response, predict=pred)
sum(diag(confus.matrix))/sum(confus.matrix) # diagonal / total

library(rattle)

fancyRpartPlot(train_control.model$finalModel)

#---------------------------- Advance CARET -------------------

#names(getModelInfo())

#modelLookup("ctree2")

#---------------------------------- ctree2 + CARET ----------------

library(party)

set.seed(123)

train_control.model <- train(

Response~., data = trainData, method = "ctree2",

trControl = trainControl("cv", number = 5),

tuneGrid = expand.grid(maxdepth =3, mincriterion = 0.95 )

#tuneLength = 6,

)

plot(train_control.model$finalModel)

#--------- Prediction ---------------------------------

pred <- predict(train_control.model, newdata=testData)

# Use table() to compare actual and predicted classes
table(real=testData$Response, predict=pred)

# Prediction accuracy = diagonal count / total count
confus.matrix <- table(real=testData$Response, predict=pred)
sum(diag(confus.matrix))/sum(confus.matrix) # diagonal / total
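rpart grows classification trees by choosing, at each node, the split that most reduces Gini impurity (its default), and scikit-learn's DecisionTreeClassifier also defaults to criterion="gini". ctree2 works differently: it splits only when a permutation test is significant, and mincriterion = 0.95 corresponds to a p-value threshold of 0.05. A plain-Python sketch of the Gini split search on one numeric feature; the toy values and labels are made up.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_threshold(x, y):
    """Best split 'x <= t' on one numeric feature by weighted Gini impurity."""
    best_t, best_score = None, float("inf")
    for t in sorted(set(x))[:-1]:          # the largest value would leave the right side empty
        left = [yi for xi, yi in zip(x, y) if xi <= t]
        right = [yi for xi, yi in zip(x, y) if xi > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score


# Toy feature values and Response labels
x = [1, 2, 3, 10, 11, 12]
y = ["No", "No", "No", "Yes", "Yes", "Yes"]
print(best_threshold(x, y))
```

A real tree repeats this search over every feature, keeps the best split, and recurses into each child until a stopping rule (such as the depth limit tuned above) is met.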

Decision Tree Python code (please check for yourself that it runs)

# Import the required libraries
import pandas as pd
import numpy as np
from sklearn.model_selection import train_test_split, GridSearchCV
from sklearn.tree import DecisionTreeClassifier, plot_tree
from sklearn.metrics import confusion_matrix, accuracy_score
from sklearn.ensemble import RandomForestClassifier
import matplotlib.pyplot as plt

# Set the working directory
import os
os.chdir("c://Koong//csv//DataScience//DSMK")

# Read the CSV file
credit = pd.read_csv("CustomerValueClean.csv")

# Set the random seed
np.random.seed(100)

# Split into training (70%) and test (30%) data, stratified on Response
trainData, testData = train_test_split(credit, test_size=0.3, stratify=credit['Response'], random_state=100)

# Independent variables (X) and dependent variable (y); get_dummies one-hot
# encodes text columns, which scikit-learn trees cannot use directly
X_train = pd.get_dummies(trainData.drop(columns=['Response']))
y_train = trainData['Response']
X_test = pd.get_dummies(testData.drop(columns=['Response'])).reindex(columns=X_train.columns, fill_value=0)
y_test = testData['Response']

# Decision tree tuned with 3-fold cross-validation to limit overfitting
dt_model = DecisionTreeClassifier(random_state=100)
param_grid = {'max_depth': range(1, 10), 'min_samples_split': range(2, 10)}
cv = GridSearchCV(dt_model, param_grid, cv=3)
cv.fit(X_train, y_train)

# Best model
best_model = cv.best_estimator_
print(best_model)

# Predict
y_pred = best_model.predict(X_test)

# Confusion matrix of the predictions
conf_matrix = confusion_matrix(y_test, y_pred)
print(conf_matrix)

# Accuracy
accuracy = accuracy_score(y_test, y_pred)
print(f"Accuracy: {accuracy:.4f}")

# Plot the decision tree
plt.figure(figsize=(20, 10))
plot_tree(best_model, feature_names=list(X_train.columns), class_names=[str(c) for c in best_model.classes_], filled=True)
plt.show()

# scikit-learn has no conditional inference tree (caret's "ctree2"), so a shallow
# random forest is used here instead; it is a different model, not a ctree2 equivalent
rf_model = RandomForestClassifier(random_state=123, max_depth=3, min_samples_split=2)
rf_model.fit(X_train, y_train)

# Predict
y_pred_rf = rf_model.predict(X_test)

# Confusion matrix
conf_matrix_rf = confusion_matrix(y_test, y_pred_rf)
print(conf_matrix_rf)

# Accuracy
accuracy_rf = accuracy_score(y_test, y_pred_rf)
print(f"Accuracy (Random Forest): {accuracy_rf:.4f}")
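trainControl(method = "cv", number = 3) and GridSearchCV(..., cv=3) both rotate through K folds: each fold is held out once while the model is trained on the rest, and the scores are averaged. A plain-Python sketch of the fold mechanics plus the "diagonal / total" accuracy used above. It is unshuffled and unstratified for clarity, whereas caret and GridSearchCV (for classifiers) build class-stratified folds; the confusion-matrix numbers are made up.

```python
def k_fold_indices(n, k):
    """Split range(n) into k contiguous folds; yields (train_idx, test_idx)."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


def accuracy_from_confusion(matrix):
    """Accuracy = diagonal count / total count, as in the R code."""
    diag = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return diag / total


for train, test in k_fold_indices(10, 3):
    print(len(train), test)
print(accuracy_from_confusion([[50, 10], [5, 35]]))
```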

20240605 SEO

Traffic SEO (06/05): search engine optimization

0. Google Search Engine case discussion

1. Practical SEO steps

2. SEO keyword worksheet

4. Target keywords